Bagging Machine Learning Explained

Bagging is a powerful method for improving the performance of simple models and reducing the overfitting of more complex ones. Decision trees have a lot of similarity and correlation in their…

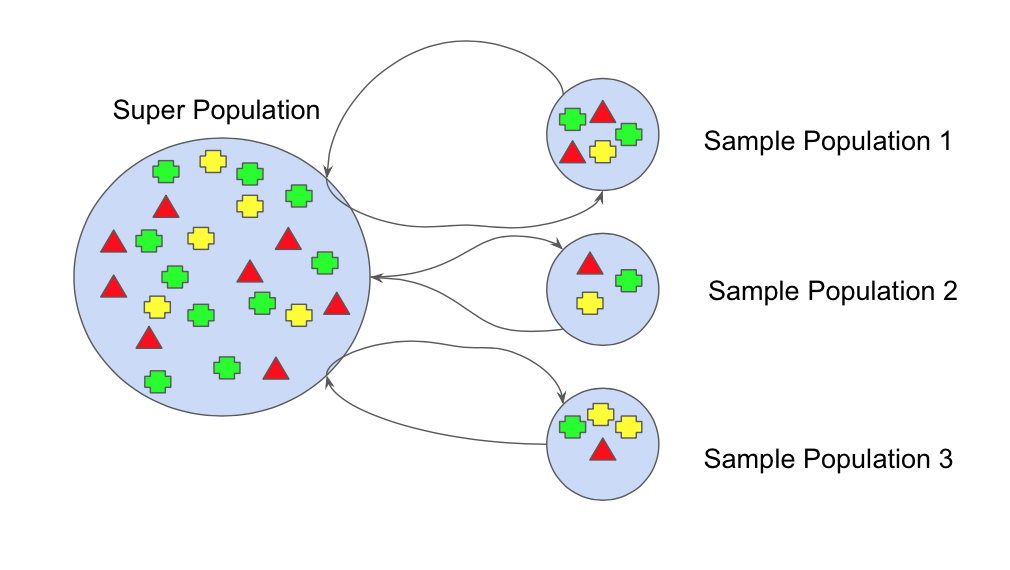

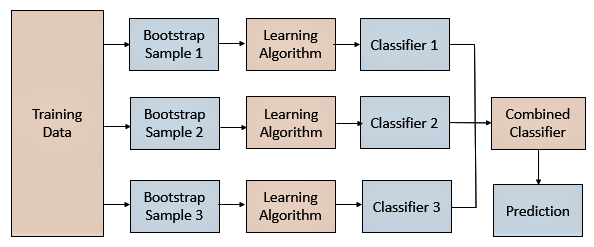

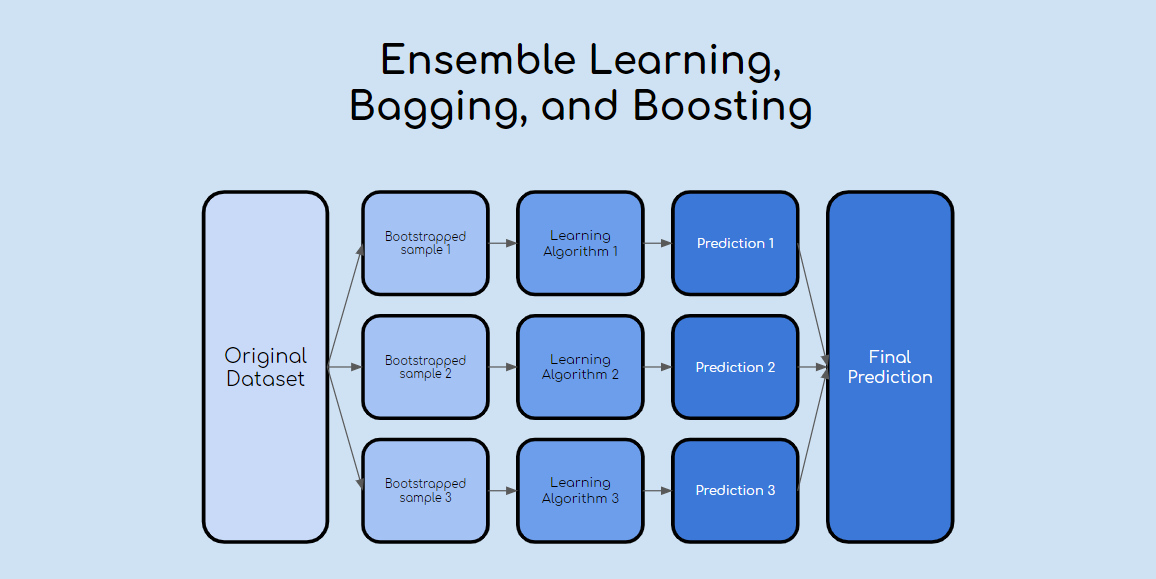

A fresh bootstrap sample is drawn each time a base model is trained.
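
As a rough illustration of what "bootstrapped" means here, the sketch below draws several samples with replacement from a toy dataset, one per base model. The helper name `bootstrap_sample`, the toy data, and the choice of a sample size equal to the dataset size are assumptions made for the example, not something the text specifies.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return rng.choices(data, k=len(data))

rng = random.Random(0)  # fixed seed so the run is repeatable
data = list(range(10))  # toy dataset: ten row identifiers

# Each base model would get its own, independently drawn sample.
samples = [bootstrap_sample(data, rng) for _ in range(3)]
for i, sample in enumerate(samples):
    print(f"model {i}: {sorted(sample)}")
```

Because sampling is done with replacement, some rows typically appear more than once in a sample while others are left out entirely.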

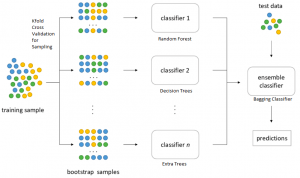

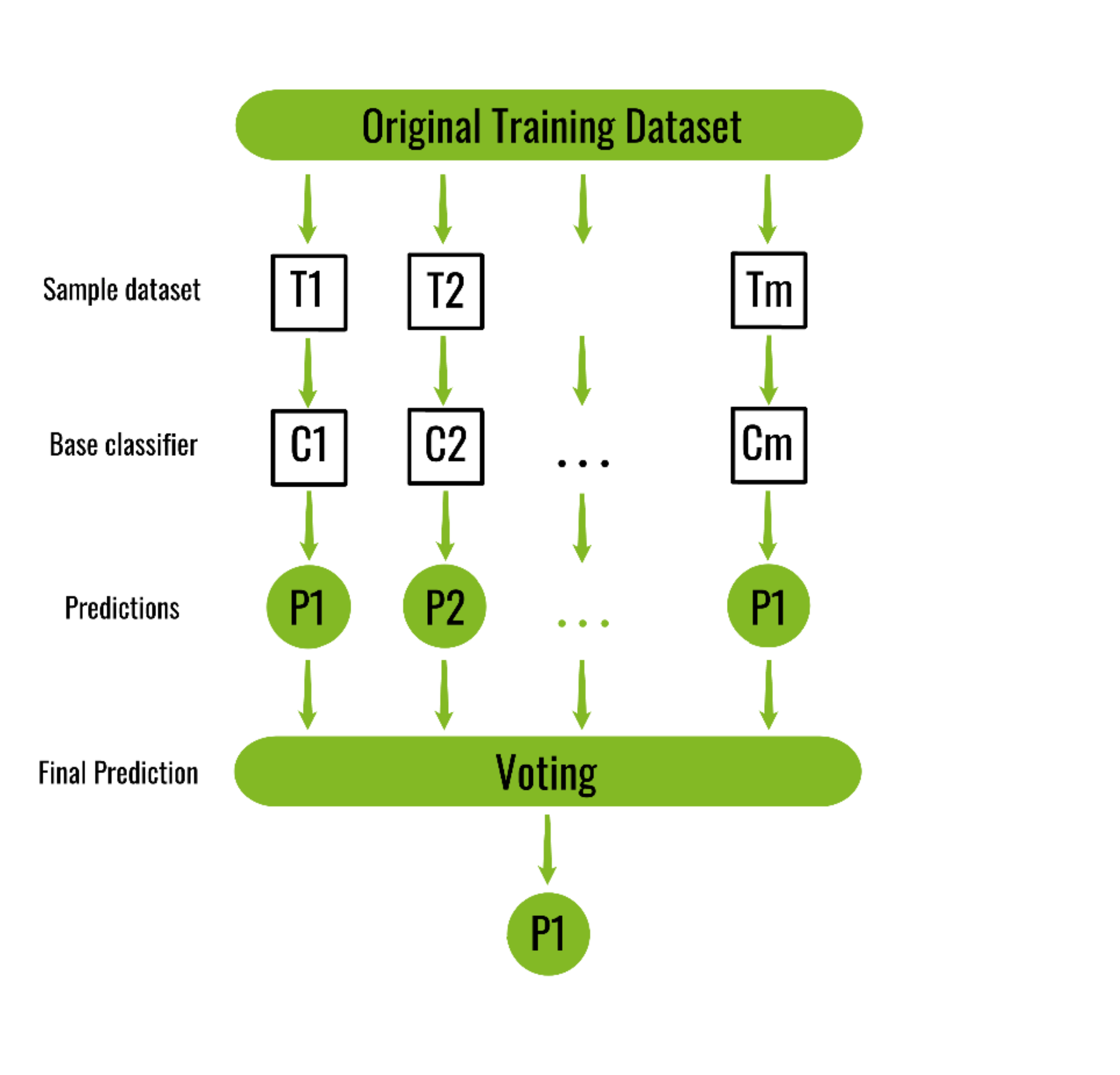

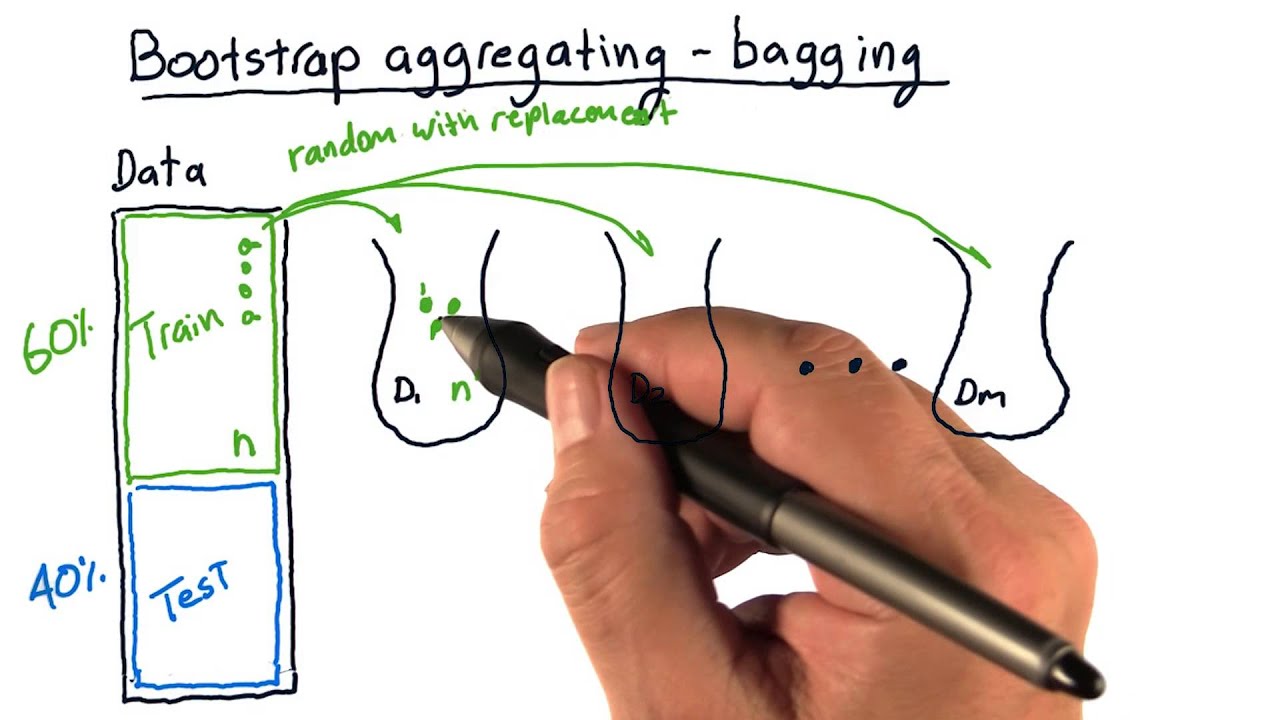

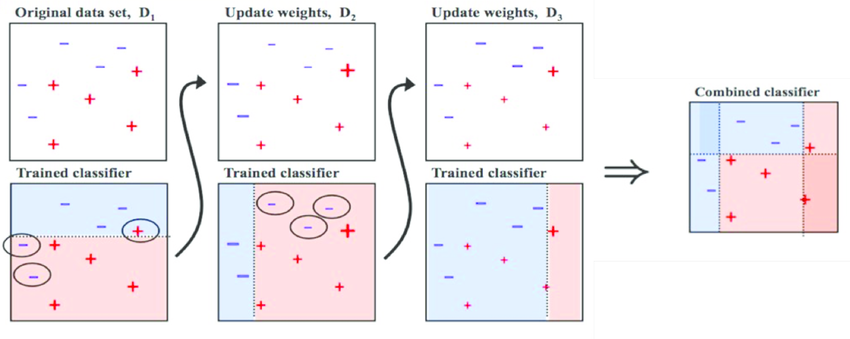

As we have seen, bagging draws random samples with replacement to train n base learners. Because each learner is trained independently of the others, the learners can be trained in parallel. Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.

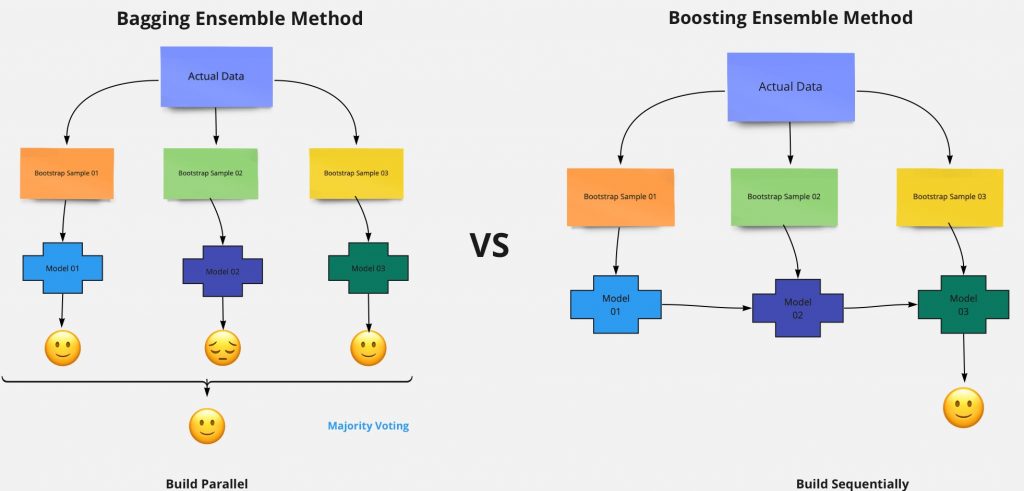

Bagging is a parallel method that fits the different base learners independently of one another. Ensemble machine learning methods can mainly be categorized into bagging and boosting.

Bagging is typically used when you want to reduce variance while leaving the bias essentially unchanged. Bootstrapping in bagging refers to a technique where multiple subsets are…
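
One way to see why averaging lowers variance but not bias is the standard result for the average of identically distributed predictors. The symbols are assumptions introduced for this sketch: $B$ base models $f_1, \dots, f_B$, each with variance $\sigma^2$ and pairwise correlation $\rho$.

$$
\mathbb{E}\left[\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right] = \mathbb{E}\left[f_1(x)\right],
\qquad
\operatorname{Var}\left[\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right] = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

The expectation, and therefore the bias, is the same as for a single model. The second variance term shrinks as $B$ grows, while the first term remains, so the benefit is limited when the base models are strongly correlated.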

Bagging consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners.
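
The fit-then-average idea can be sketched in plain Python. The base model here is a one-split regression stump, and the names `fit_stump` and `fit_bagged`, the synthetic step-function data, and the choice of 25 models are all assumptions made for illustration rather than anything prescribed above.

```python
import random
import statistics

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs by minimising squared error."""
    points = sorted(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    best = None
    for i in range(1, len(points)):
        if xs[i] == xs[i - 1]:
            continue  # cannot split between identical x values
        left, right = ys[:i], ys[i:]
        left_mean, right_mean = statistics.fmean(left), statistics.fmean(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, (xs[i - 1] + xs[i]) / 2, left_mean, right_mean)
    if best is None:  # every x identical: fall back to a constant prediction
        mean = statistics.fmean(ys)
        return lambda x: mean
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def fit_bagged(points, n_models, rng):
    """Fit n_models stumps, each on its own bootstrap sample, and average them."""
    models = []
    for _ in range(n_models):
        sample = rng.choices(points, k=len(points))  # sampling with replacement
        models.append(fit_stump(sample))
    return lambda x: statistics.fmean([model(x) for model in models])

# Synthetic data (assumption): a noisy step from 0 to 1 at x = 0.5.
rng = random.Random(0)
train = []
for _ in range(200):
    x = rng.random()
    y = (1.0 if x > 0.5 else 0.0) + rng.gauss(0, 0.3)
    train.append((x, y))

predict = fit_bagged(train, n_models=25, rng=rng)
print(predict(0.1), predict(0.9))
```

Each stump places its split at a slightly different point, so the averaged prediction changes more gradually around the step than any single stump does.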

Bagging stands for Bootstrap Aggregating, sometimes written Bootstrapping Aggregating. Also known as bootstrap aggregation, it is an ensemble learning method commonly used to reduce variance within a noisy dataset.

The bagging technique is useful for both regression and statistical classification. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. In bagging, a random sample…

The bias-variance trade-off is a challenge we all face when training machine learning algorithms. Bagging is a technique to use when variance is the problem. The variance reduction happens when you average predictions over different regions of the input space.

Bagging is a powerful ensemble method which helps to reduce variance and, by extension… Bagging is short for Bootstrap Aggregation and is used to decrease the variance of the prediction model.

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their predictions. Boosting should not be confused with bagging, which is the other main family of ensemble methods.
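
The description above matches scikit-learn's `BaggingClassifier`, so a minimal usage sketch might look like the following. The synthetic dataset, the decision-tree base classifier, and every hyperparameter value are assumptions picked for the example. The base estimator is passed positionally because its keyword name differs between scikit-learn releases.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (assumption: 500 rows, 10 features).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

bagging = BaggingClassifier(
    DecisionTreeClassifier(),  # base classifier fitted on each random subset
    n_estimators=25,           # number of base classifiers
    max_samples=1.0,           # each subset is as large as the training set
    bootstrap=True,            # draw the subsets with replacement
    random_state=0,
)
bagging.fit(X_train, y_train)

accuracy = bagging.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

Setting `bootstrap=False` would draw the subsets without replacement instead, which is a related but different scheme from the bootstrap sampling discussed here.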

Bagging can be used with any machine learning algorithm, but it is particularly useful for decision trees because they inherently have high variance and bagging is able to… As seen in the introduction to ensemble methods, bagging is one of the advanced ensemble methods, which improve overall performance by sampling random… Bagging and boosting are the two popular ensemble methods.

In bagging, the weak learners are trained in parallel using randomness in… So before looking at bagging and boosting in detail, let's get an idea of what ensemble learning is.

Let's assume we have a sample dataset of 1000…
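
To make the 1000-sample case concrete, the sketch below assumes 1000 rows and a bootstrap sample of the same size (neither detail is stated above) and checks how many distinct rows one bootstrap sample contains. The analytic value used for comparison is the standard probability that a given row is drawn at least once in n draws with replacement.

```python
import random

n = 1000                 # assumed number of rows in the dataset
rng = random.Random(0)   # fixed seed so the run is repeatable

# One bootstrap sample: n row indices drawn with replacement.
indices = rng.choices(range(n), k=n)

# Fraction of distinct rows that made it into the sample.
unique_fraction = len(set(indices)) / n

# Probability that a given row is drawn at least once in n draws.
expected_fraction = 1 - (1 - 1 / n) ** n

print(f"observed: {unique_fraction:.3f}, expected: {expected_fraction:.3f}")
```

Roughly 63% of the rows end up in each bootstrap sample. The remaining rows are left out of that sample, which is part of what makes the base learners differ from one another.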

The principle is very easy to understand: instead of… Bagging, a parallel ensemble method whose name stands for Bootstrap Aggregating, is a way to decrease the variance of the prediction model by generating additional data in the training…

Given a training dataset $D = \{(x_n, y_n)\}_{n=1}^{N}$ and a separate test set $T = \{x_t\}_{t=1}^{T}$, we build and deploy a bagging model with the following procedure. Bagging, which is also known as bootstrap aggregating, sits on top of the majority voting principle.
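
Since the steps of the procedure are not listed here, the following is only a plausible sketch of it for classification: train several base classifiers on bootstrap samples of the training set, then label each test point by majority vote. The 1-nearest-neighbour base classifier, the names `fit_1nn` and `bagging_predict`, and the toy data are all assumptions made for the example.

```python
import random
from collections import Counter

def fit_1nn(sample):
    """Return a 1-nearest-neighbour classifier over (x, label) pairs."""
    def predict(x):
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict

def bagging_predict(train, test, n_models, rng):
    """Train n_models classifiers on bootstrap samples and majority-vote on test."""
    models = [
        fit_1nn(rng.choices(train, k=len(train)))  # one bootstrap sample per model
        for _ in range(n_models)
    ]
    predictions = []
    for x in test:
        votes = Counter(model(x) for model in models)
        predictions.append(votes.most_common(1)[0][0])  # most frequent label wins
    return predictions

rng = random.Random(0)
# Toy training set: x in [0.0, 9.9], labelled "low" below 5.0 and "high" otherwise.
train = [(x / 10, "low" if x < 50 else "high") for x in range(100)]
test = [1.0, 9.0]

predictions = bagging_predict(train, test, n_models=11, rng=rng)
print(predictions)
```

An odd number of models avoids tied votes in the two-class case. For regression, the vote would be replaced by an average of the base models' outputs.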

A Bagging Machine Learning Concepts

Bagging Classifier Instead Of Running Various Models On A By Pedro Meira Time To Work Medium

Bagging Classifier Python Code Example Data Analytics

Ensemble Learning Bagging Boosting Stacking And Cascading Classifiers In Machine Learning Using Sklearn And Mlextend Libraries By Saugata Paul Medium

Ml Bagging Classifier Geeksforgeeks

Bootstrap Aggregating Wikiwand

Guide To Ensemble Methods Bagging Vs Boosting

Bagging And Boosting Explained In Layman S Terms By Choudharyuttam Medium

Bootstrap Aggregating Bagging Youtube

Bagging Bootstrap Aggregation Overview How It Works Advantages

Mathematics Behind Random Forest And Xgboost By Rana Singh Analytics Vidhya Medium

What Is Bagging In Machine Learning And How To Perform Bagging

Learn Ensemble Methods Used In Machine Learning

A Primer To Ensemble Learning Bagging And Boosting

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

Boosting And Bagging Explained With Examples By Sai Nikhilesh Kasturi The Startup Medium

Ensemble Learning Bagging And Boosting Explained In 3 Minutes